Mercenary State

South Africa today is among the world’s foremost players in state and private militarization, producing weapons and exporting expertise for militias and national armed forces. This stems in part from a sprawling domestic security industry: In 2021, personal spending on private security in the country made up a massive $17 billion, or 4.2 percent of total GDP. The South African government itself spent $880 million on private security. Meanwhile, the country boasts one of the strongest armies in Africa, playing a regional “peacekeeping role” and supporting other state militaries in combat against rebels.

These different components contribute to South Africa’s “securitization complex,” established during apartheid and composed of arms companies, mercenary groups, the military, and contracted security expertise. Over nearly five decades, the apartheid state’s security industry quashed local uprisings and decolonial struggles, backed fellow white-minority regimes, and targeted the supposed Black and communist threats of the “swart gevaar” and “rooi gevaar.” The apartheid-era South African army, the South African Defense Force (SADF), employed its forces to destabilize liberation movements in Angola and Mozambique through the twenty-three-year-long “Border Wars” in Namibia, Zambia, and Angola. The veterans of this era have since moved into private security companies based in South Africa that operate throughout the region.

Today, one of apartheid’s greatest remnants is this vast securitization complex. Despite the declining presence of the South African military in domestic life, militarization has been “exported” through a privatized network of military services and weapons sales for a host of different clients, including states, multinational corporations, and other armed groups. The reach of these industries now extends beyond the African continent, entangling South Africa in conflicts around the world.

“Corporate warriors”

For much of the second half of the twentieth century, the SADF enforced the apartheid state’s laws, becoming infamous for its use of brute force, live fire against civilians, and other crimes against humanity. From 1967 to 1993, young white men were required to complete nine months of military service, and over 600,000 soldiers served in the army during that period. During the “Border Wars,” the SADF was deployed to destabilize newly independent southern African countries.1 In Namibia, which South Africa occupied, the SADF also waged war against the local liberation movement. Following the end of apartheid in 1994, the army became the South African National Defense Force (SANDF). The post-apartheid force sought to integrate troops from the SADF and from guerrilla forces associated with the African National Congress (through its armed wing, Umkhonto we Sizwe), the Pan Africanist Congress, and the Inkatha Freedom Party. The SANDF abandoned conscription and now employs 70,000 service members, a drastic reduction from its earlier numbers.

The apartheid-era government’s involvement in dirty conflicts beyond its borders, together with the transformation of the SADF into the SANDF, spurred the rise of a major mercenary and private security industry that spilled into the rest of the continent. As SADF soldiers and South African police officers faced widespread unemployment, investors with financial links to the apartheid regime began backing small security companies. Former soldiers were a ready-made security contingent for a wide range of services: protecting South African assets in Africa, the assets of other African states, and corporate holdings (typically oil fields and extractive projects), as well as providing private security for politicians, wealthy elites, and the growing numbers of middle-class South Africans living in gated communities.

As Peter Warren Singer, a foreign policy analyst and former US military consultant, describes in his 2003 book Corporate Warriors, recruitment was easy. Private security offered well-paid jobs to soldiers with wide-ranging experience in regional combat and domestic security. Singer notes that almost 60,000 soldiers left the SADF after 1994; these newly unemployed workers lacked experience in any other field and sought to regain the prestige they had lost under the transitional government. Private security companies paid significantly more than any government position, with monthly salaries ranging from US$2,000 to US$13,000. The soldiers included white and Black South Africans, Namibians, and Angolans, though most high-level employees were white.2

Today, South Africa has one of the highest violent-crime rates in the world and hosts a robust security industry that makes up a significant portion of domestic employment and GDP. According to the Private Security Industry Regulatory Authority (PSiRA), a state-mandated regulatory body for the private security sector, by March 2024 the country had 2.9 million registered private security officers and 20,709 registered private security companies, compared to 150,000 police officers.3

South Africa’s security businesses, sometimes known as “consultancies,” were established by high-ranking police and army officers from the pre-democracy era. These businesses are trusted for their “knowledge” and “technical expertise,” gained in part through years of quelling anti-apartheid uprisings. Former South African army generals and police officers have set up joint companies with politicians from Mozambique and Angola, and South Africans commonly serve as consultants to private security companies in the region.

The most notorious South African private military company was Executive Outcomes (EO), founded by former SADF officer Eeben Barlow. Created in 1989 as apartheid was in decline, EO recruited fighters who had left the SADF after the Border Wars, as well as former members of the ANC’s armed wing, Umkhonto we Sizwe. The company was officially dissolved at the end of 1998.4

During the 1990s, EO was stationed in Kenya and the Democratic Republic of Congo (then Zaire). Some observers called EO “a private Pan-African peace-keeping force of a kind which the international community has long promised, but failed to deliver.” In his book, Singer praises EO as “a true innovator in the overall privatized military industry, providing the blueprint for how effective and lucrative the market of forces-for-hire can be.”5

EO offered five key services: strategic and tactical military advisory services; an array of sophisticated military training packages in land, sea, and air warfare; peacekeeping or “persuasion” services; advice to armed forces on weapons selection and acquisition; and paramilitary services. Training packages covered the entire realm of military operations, from basic infantry training and armored warfare specialties to parachute operations.6 The company managed to do business with African governments that had supported the ANC during the anti-apartheid struggle. EO inserted itself into elite governmental networks and concealed bribe payments through offshore shell companies. It offered political leaders a combination of weapons, bribes, and power, blending South Africa’s historical weapons expertise and technology with the colonial legacy of corruption that still plagues the continent.

Although EO was disbanded in 1998, it provided a model for hundreds of other private security and mercenary groups in South Africa. These organizations went on to protect oil and gas assets and mining sites and to work alongside local soldiers. Long after the company’s dissolution, former EO mercenaries have reportedly turned up around the world: in combat in Libya, working for the US military and Blackwater in Iraq, and taking part in the attempted coup in Equatorial Guinea in 2004.

While private security groups in South Africa have flourished since the fall of apartheid, the SANDF has lost power and suffered a tarnished reputation. Though its apartheid-era predecessor played a major role in domestic social control, the SANDF is largely invisible in daily South African life today. Domestically, the force is mostly confined to army bases or brought out to police neighborhoods during intense bouts of gang violence. Internationally, however, the SANDF remains a major actor, fighting rebel groups and securing the assets of extractive industries essential to both the South African state and multinational corporations.

Weapons trade

The post-apartheid securitization complex has evolved to include many interrelated sectors and ambitions, but it is in weapons manufacturing that we can discern the roots of a national defense and security sector. Present-day weapons development in South Africa draws on the legacy of the apartheid state-owned industry. In 1961, after Pretoria left the Commonwealth, it lost the bulk of its access to British weapons. This shift, alongside the voluntary United Nations arms embargo on the apartheid state in 1963, meant that the country could no longer rely on buying arms from foreign states.

In 1968, the government created the Armaments Corporation of South Africa (Armscor) to secure a domestic weapons supply under the embargo. However, domestic production relied on technology transfers and assistance from other countries, in violation of the embargo. An illicit network grew up to facilitate the flow of weapons to and from apartheid South Africa, for use both in international combat and in urban warfare and domestic repression within and beyond its borders.

South Africa and its trade partners also sought to test their arms. Israel and South Africa, for example, conducted joint missile tests on South African soil and regularly co-developed weapons for use by both states during this period. Since the mid-twentieth century, South Africa has remained a popular location for developing new arms. Through Armscor, which also served as a procurement agency, South Africa became a key producer of armored vehicles for domestic warfare. The weapons industry was crucial to the survival of the apartheid government, allowing it to suppress local uprisings and pursue wars in Angola and Namibia.

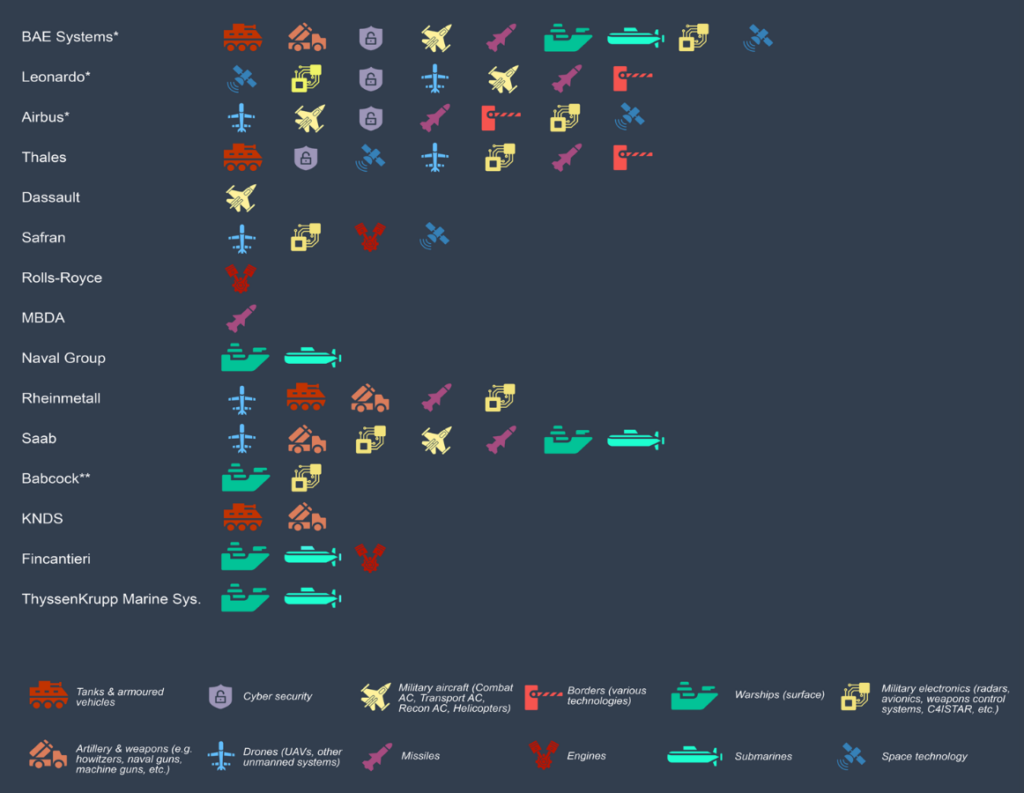

In 1992, between the official end of apartheid and the first democratic elections, the state-owned Armscor split into two entities: Denel, now the manufacturing arm of the state weapons industry, and Armscor, the procurement and weapons-testing agency for the SANDF. For years, Denel maintained a strong relationship with European weapons giants, including Germany’s Rheinmetall. Following the end of apartheid, Denel and Rheinmetall established a joint venture, Rheinmetall Denel Munitions (RDM), in which the German manufacturer holds a 51 percent stake. Present-day Denel, however, is a shell of its former self, having received $500 million in government bailouts over the last five years just to stay afloat. In 2024, the government allocated $78 million to Armscor and refused new funding to Denel, though it made clear that it would not let Denel collapse. Denel, RDM, and forty-seven other South African defense companies have clients on every continent. Denel was one of the first producers of the popular G5 155mm howitzer, a weapon used operationally in several conflicts, such as the Angolan Civil War and the Iran-Iraq War.

South African companies have also increased their investment in munitions factories. Most recently, in January 2023, RDM announced it would build an ammunition-manufacturing factory for the Hungarian state in Várpalota. In its statement, RDM said the contract covers the supply of plant engineering, technology, and process know-how, and the associated documentation, training, and all activities necessary to achieve full-scale production. RDM has established ammunition-manufacturing plants in thirty-six countries over the last thirty years, most recently for customers in Saudi Arabia and Egypt. South African accents are thus commonly heard at arms manufacturing companies around the world.

Despite a perceived decline in South African weapons expertise following the end of apartheid, weapons manufacturers continue to hold sway in southern African politics. In 2013, the Telegraph found that the head of South Africa’s Paramount Group, Africa’s largest private defense and aerospace company, had persuaded then-Malawian President Joyce Banda to purchase $145 million worth of arms in exchange for public relations and media support for her political campaign.7

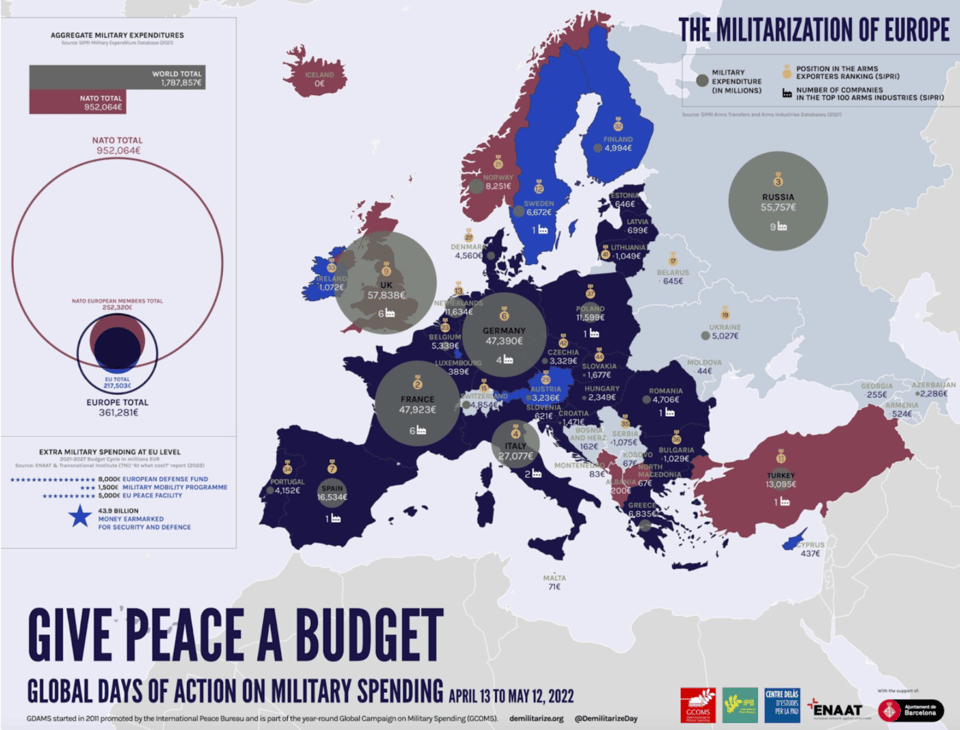

The dominance of the securitization complex in South African politics becomes clear in the absence of effective domestic controls on weapons exports. In 2023, the National Conventional Arms Control Committee (NCACC), the South African state body that controls weapons licensing, reported that the country exported $2.3 billion worth of munitions to fifty-nine countries. The majority of South African weapons are sent to Europe: In 2023, Hungary and Germany accounted for 24 and 26 percent of total arms exports, respectively, while 20 percent went to African countries and 8 percent to the Middle East.

Israel was South Africa’s largest weapons importer from 1977 to 1987, when the arms embargo on apartheid South Africa and US pressure forced Israel to impose sanctions on South Africa. After the democratic transition, the NCACC implemented a ban on weapons sales to Israel in 2004, but this policy has been easy to circumvent. Although the NCACC requires importers to seek approval for any resale of South African weapons through an end-user certificate (EUC), the process relies on good faith. South African-produced 155mm howitzers are sold to Germany, Israel’s second-largest weapons supplier, and may make their way to Israel without South African approval. The NCACC is also responsible for enforcing South Africa’s mercenary laws: any South African citizen wishing to fight in another country’s army must receive NCACC approval. Still, for decades, South African nationals have served in the Israel Defense Forces with few repercussions.

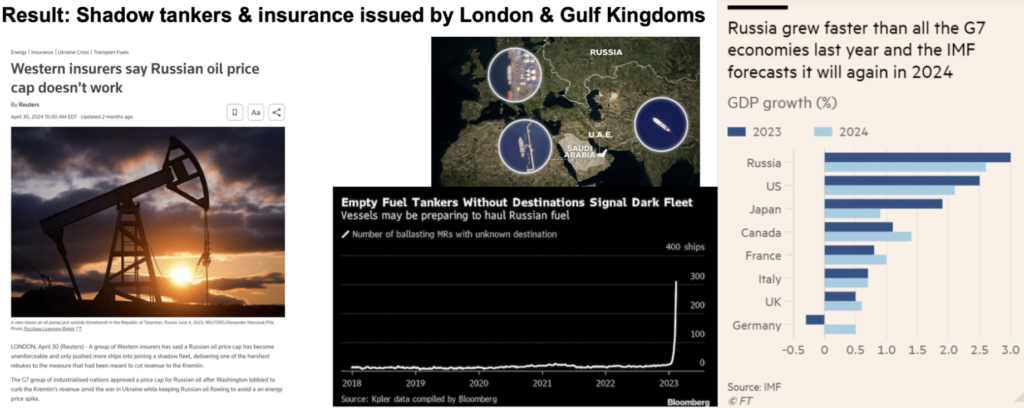

The NCACC itself is subject to industry and geopolitical pressure. In 2021, after the South African watchdog Open Secrets asked the NCACC whether South African weapons sold to Saudi Arabia had been used in Yemen, the NCACC responded that the matter was “none of its concern.” When the NCACC inserted a clause into the EUC allowing on-site inspections to verify whether weapons exported to Saudi Arabia were being used in Yemen, Saudi Arabia, the United Arab Emirates, and the domestic weapons industry all objected, forcing the NCACC to repeal the change.8 On the global stage, South Africa’s rhetoric of “peacekeeping” is undermined by the absence of effective enforcement mechanisms in the arms trade. This contradiction has gained more attention in the wake of the country’s high-profile case against Israel in the International Court of Justice.

Exported militarization

Today, South Africa’s public- and private-sector militarization is increasingly linked to an extractivist economic model. South Africa’s own energy crisis, marked by frequent and extended rolling blackouts known as load-shedding, has heightened the need for reliable external energy sources. South African companies, including Sasol, Exxaro, and Kumba Iron Ore, hold significant investments in Mozambique’s natural gas and coal sectors. Eskom, South Africa’s state-owned electricity company, imports electricity from the Cahora Bassa hydroelectric dam in Mozambique. Protecting these assets has become the primary objective of both the SANDF and private South African security forces.

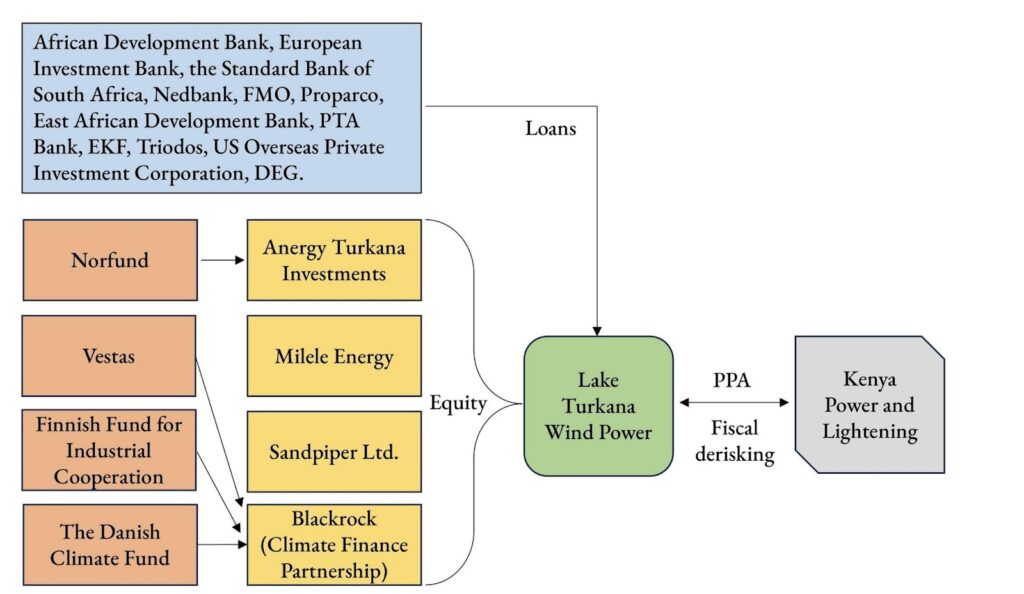

From July 2021 to July 2024, the SANDF was stationed in Cabo Delgado province as part of the Southern African Development Community Mission in Mozambique (SAMIM), a regional peacekeeping mission set up by the Southern African Development Community (SADC). The force was sent to protect three major gas projects in Cabo Delgado, the largest in Africa, valued at a total of $50 billion: the Mozambique Liquefied Natural Gas project (Mozambique LNG) led by TotalEnergies; Coral South Floating Liquefied Natural Gas led by Eni and ExxonMobil; and Rovuma LNG led by Eni, ExxonMobil, and the China National Petroleum Corporation. Through the Industrial Development Corporation, the Development Bank of Southern Africa, and the Export Credit Insurance Corporation of South Africa (ECIC), the South African government invested heavily in the projects. The ECIC provided Mozambique LNG with $1.2 billion in funding, and several private South African companies won smaller contracts; the major logistics company Grindrod, for example, was contracted to build port and transport infrastructure.

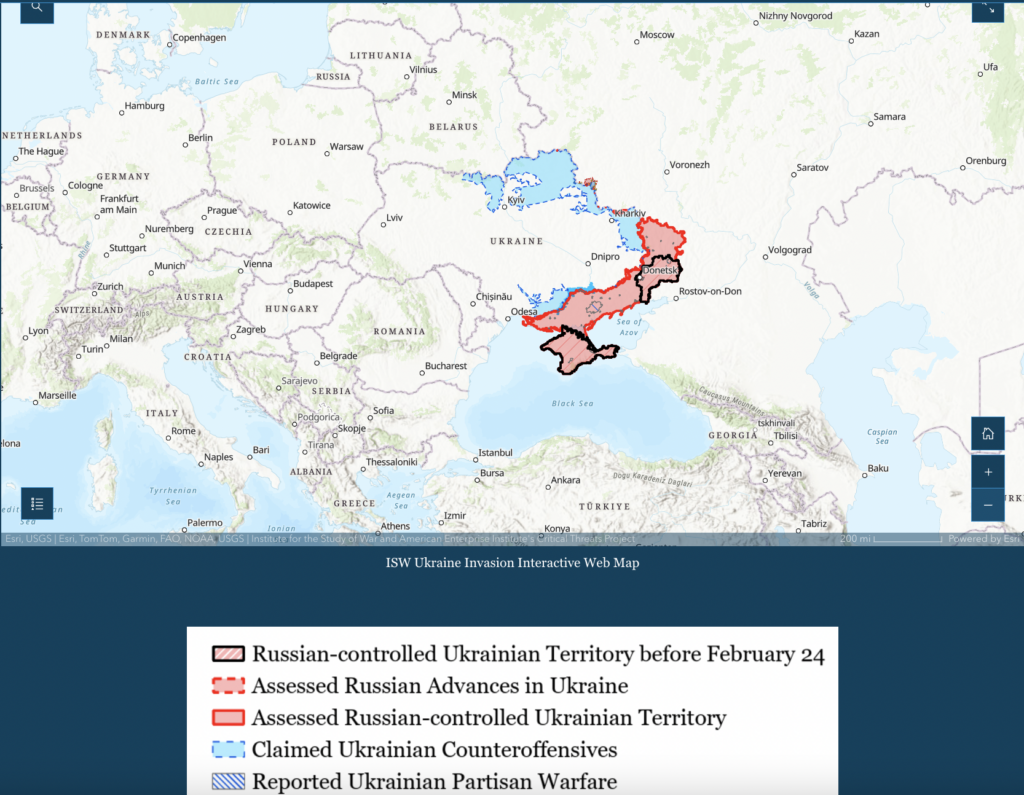

Insurgent attacks on Cabo Delgado security personnel and on communities surrounding the Afungi Park began in 2017, sparking a war among the insurgents, the Mozambican and Rwandan militaries, SAMIM, and international private security and mercenary groups, including the notorious Russian Wagner Group. SAMIM, which brought together soldiers from Angola, Botswana, the Democratic Republic of Congo, Lesotho, Malawi, South Africa, Tanzania, and Zambia, was ultimately a failure. The mission faced allegations of human rights violations, with Human Rights Watch reporting that soldiers sexually assaulted civilians and mistreated and mutilated the dead. At the same time, insurgent attacks continued to intensify. Thousands were killed and over one million were displaced. In May of last year, SAMIM officially announced its withdrawal from the region.

The Mozambican government also hired the Dyck Advisory Group, a South African private security company previously accused of human rights violations, for security assistance in Cabo Delgado. Following a combat operation, fifty-three witnesses told Amnesty International that Dyck operatives fired at civilian infrastructure, including hospitals, schools, and homes, indiscriminately fired machine guns from helicopters, and dropped hand grenades into crowds of people. Although the Mozambican government quietly chose not to renew the Dyck Advisory Group’s contract, the group was never held accountable for its crimes. Officially a private security company, Dyck functioned as a mercenary group: troops hired by the government to protect strategic assets in a model of “exported militarization.”

Beyond Mozambique, 2,900 SANDF soldiers remain stationed today in the Kivu region of the Democratic Republic of Congo as part of the SADC mission targeting M23 rebels. The region is home to the operations of South African mining companies MPC Mining and Alphamin Bisie Mining, both accused by local communities of land grabs and of causing displacement. In 2023, the mining industry contributed 7.3 percent ($11 billion) of South Africa’s GDP.

Conflicts around these sites of extraction have revived South Africa’s role in quelling protest and waging counterinsurgency. Today, rather than focusing on domestic uprisings, South Africa’s securitization complex consists of a booming domestic private security industry paired with an exported model of militarization centered on the weapons trade and the provision of military services. This complex maintains strong ties to political elites and vested economic interests while also accounting for a significant share of South Africa’s national income and employment. The result is a disturbing continuity between the democratic state and its apartheid predecessor. While the image of South African militarization has changed, a powerful securitization complex remains fundamental to the state’s reproduction.